New PMIP challenges: Simulations of deglaciations and abrupt Earth system changes

Ivanovic RF, Capron E and Gregoire LJ

Past Global Changes Magazine 29(2): 78-79, 2021

Recent cold-to-warm climate transitions present one of the hardest tests of our knowledge of environmental processes. In coordinating transient experiments of these elusive events, the Deglaciations and Abrupt Changes (DeglAC) Working Group is finding new ways to understand climate change.

Our journey to the present

In December 2010, amidst mountains of tofu, late-night karaoke stardom, and restorative trips to the local onsen (hot springs), the PMIP3 meeting in Kyoto, Japan (pastglobalchanges.org/calendar/128657), was in full swing. Results were emerging from transient simulations of the last 21,000 years attempting to capture both the gradual deglaciation towards present-day climes and the abrupt aberrations that punctuate the longer-term trend. However, most of these experiments had employed different boundary conditions, and the models were showing different sensitivities to the imposed forcings. It was proposed that to better understand the last deglaciation, we should pool resources and develop a multi-model intercomparison project (MIP) for transient simulations of the period. This was a new kind of challenge for PMIP, which had previously focused mainly on equilibrium-type simulations (the last millennium experiment is a notable exception) of up to a few thousand years in duration. Fast forward to the present: DeglAC has its first results from its last deglaciation simulations. Eleven models of varying complexity and resolution have completed 21–15 kyr BP, with five of those running to 1950 CE or into the future.

Defining a flexible protocol

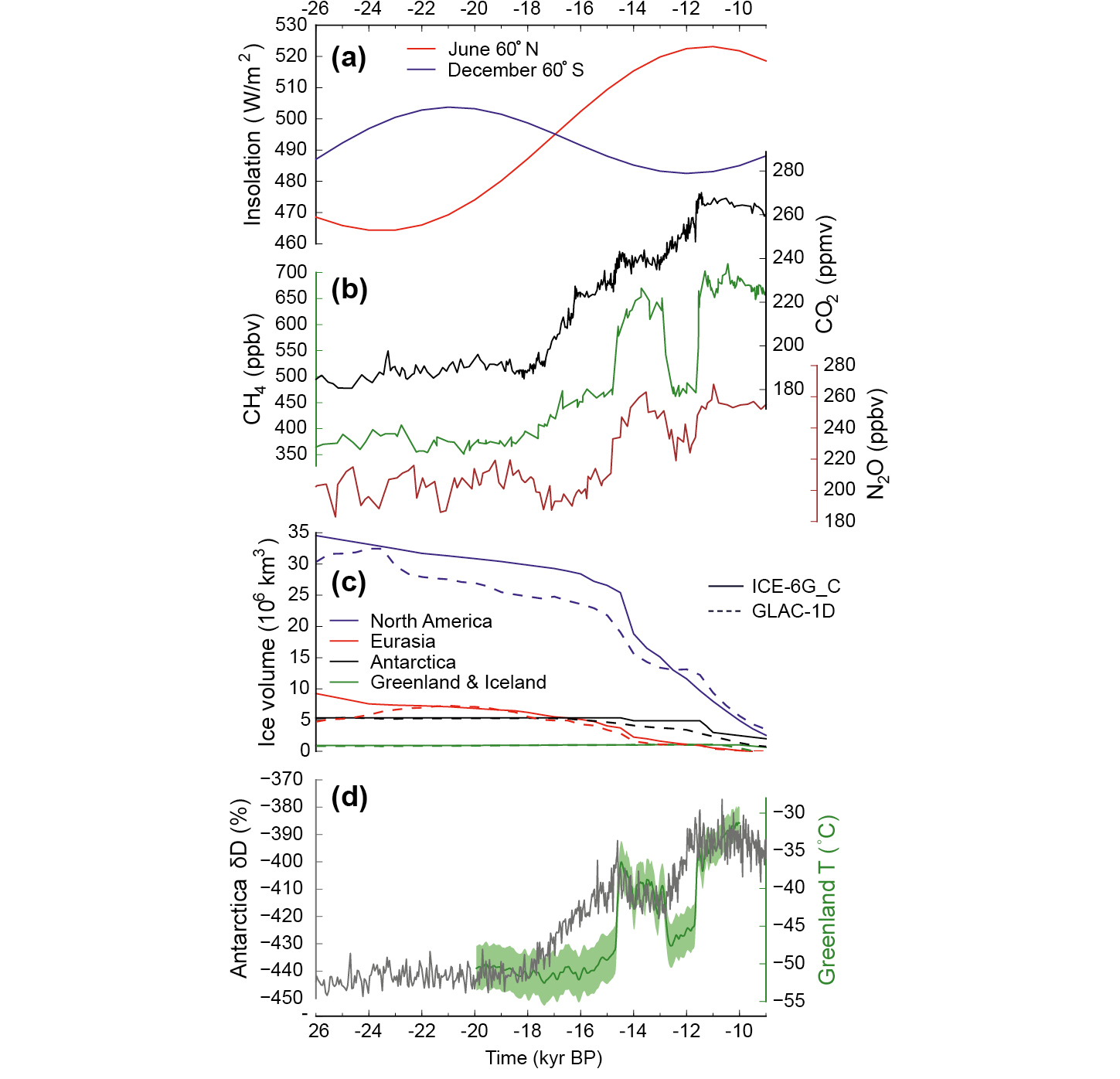

Real headway was made in 2014, when the PMIP3 meeting in Namur, Belgium (pastglobalchanges.org/calendar/128658), marked the inauguration of the DeglAC Working Group. That summer, the leaders of the Working Group made an open call for state-of-the-art, global ice-sheet reconstructions spanning 26–0 kyr BP, and two were provided: GLAC-1D and ICE-6G_C (VM5a). For orbital forcing, we adopted solutions consistent with previous PMIP endeavors, but the history of atmospheric trace gases posed some interesting questions. For instance, the incorporation of a new high-resolution record in a segment of the longer atmospheric CO₂ composite curve (Bereiter et al. 2015) raised fears of runaway terrestrial feedbacks in the models and artificial spikiness from sampling frequency. A heated debate continued over the appropriate temporal resolution to prescribe, and in the end, we left it up to individual modeling groups whether to prescribe the forcing at the published resolution, produce a spline through the discrete points, or interpolate between data points, as needed. See Figure 1 for an overview of the experiment forcings and Ivanovic et al. (2016) for protocol details and references.
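To make the choice of temporal resolution concrete, here is a minimal Python sketch of two of the options: holding each published value until the next sample, and interpolating linearly between samples. It is an illustration only, not code from the DeglAC protocol. The function names and the four sample values are hypothetical placeholders, not data from Bereiter et al. (2015), and time is assumed to be in years relative to 1950 CE (negative means before present).

```python
from bisect import bisect_right

# Hypothetical, made-up samples for illustration only (NOT a published record).
# Times are years relative to 1950 CE, in ascending order.
SAMPLE_TIMES = [-21000, -18000, -15000, -12000]
SAMPLE_CO2_PPM = [190.0, 190.0, 220.0, 240.0]


def hold_previous(times, values, t):
    """Forcing at the sampled resolution: reuse the latest sample at or before t."""
    i = bisect_right(times, t) - 1
    return values[max(i, 0)]


def linear_interp(times, values, t):
    """Forcing interpolated linearly between the two samples that bracket t."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_right(times, t)          # index of the first sample after t
    t0, t1 = times[i - 1], times[i]
    weight = (t - t0) / (t1 - t0)       # 0 at t0, 1 at t1
    return values[i - 1] + weight * (values[i] - values[i - 1])


if __name__ == "__main__":
    for year in (-19500, -16500, -13500):
        print(year,
              hold_previous(SAMPLE_TIMES, SAMPLE_CO2_PPM, year),
              linear_interp(SAMPLE_TIMES, SAMPLE_CO2_PPM, year))
```

The step-wise version passes any sharp jump in the record straight to the model, whereas interpolation spreads it over the sampling interval. A spline, the third option, would smooth further but can overshoot between points.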

The elephant in the room was what to do with ice-sheet melting. It is well known that the location, rate, and timing of freshwater forcing is critical for determining its impact on modeled ocean circulation and climate (and the impact can be large, e.g. Roche et al. 2011; Condron and Winsor 2012; Ivanovic et al. 2017). Yet, these parameters remain mostly uncertain, especially at the level of the spatial and temporal detail required by the models.

We explored a contentious proposal to set target ocean and climate conditions instead of an ice-sheet meltwater protocol. Many models have different sensitivities to freshwater (Kageyama et al. 2013), and it was strongly suspected that imposing freshwater fluxes consistent with ice-sheet reconstructions would confound efforts to produce observed millennial-scale climate events (Bethke et al. 2012). Thus, specifying the ocean and climate conditions to be reproduced by the participant models would encourage groups to employ whatever forcing was necessary to simulate the recorded events. However, the more traditional MIP philosophy is to use tightly prescribed boundary conditions to enable a direct inter-model comparison of sensitivity to those forcings, as well as evaluation of model performance and simulated processes against paleorecords. If the forcings are instead tuned to produce a target climate/ocean, then by definition of the experimental design, the models will have been conditioned to get at least some aspect of the climate "right", reducing the predictive value of the result. Although useful for examining the climate response to the target condition, and for driving offline models of other Earth system components (e.g. ice sheets, biosphere, etc.), we already know that this approach risks requiring unrealistic combinations of boundary conditions, which complicates the analyses and may undermine some of the simulated interactions and teleconnections. Ultimately, the complex multiplicity in the interpretation of paleorecords made it too controversial to set definitive target ocean and climate states in the protocol. Therefore, we recommended the prescription of freshwater forcing consistent with ice-sheet evolution, and allowed complete flexibility for groups to pursue any preferred scenario(s).
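As a rough illustration of what "freshwater forcing consistent with ice-sheet evolution" can mean in practice, the sketch below converts a time series of ice-sheet volume into a mean meltwater flux in sverdrups (1 Sv = 10⁶ m³ s⁻¹). It is not the method used by any participating group. The function name, the ice and freshwater densities, the 365-day year, and the example volumes are all assumptions chosen for illustration, and the sketch ignores where and how the water is routed to the ocean.

```python
SECONDS_PER_YEAR = 365 * 86400   # assumption: 365-day model calendar
RHO_ICE = 917.0                  # kg m^-3, assumed ice density
RHO_FRESHWATER = 1000.0          # kg m^-3, assumed freshwater density
M3_PER_S_PER_SV = 1.0e6          # definition of the sverdrup


def meltwater_flux_sv(times_yr, ice_volume_m3):
    """Mean freshwater flux (Sv) over each interval between volume snapshots.

    times_yr must be ascending. Intervals in which the ice grows return 0.0,
    i.e. this sketch only releases water and never removes it from the ocean.
    """
    fluxes = []
    for i in range(1, len(times_yr)):
        dt_s = (times_yr[i] - times_yr[i - 1]) * SECONDS_PER_YEAR
        ice_lost_m3 = ice_volume_m3[i - 1] - ice_volume_m3[i]
        water_m3 = ice_lost_m3 * RHO_ICE / RHO_FRESHWATER
        fluxes.append(max(0.0, water_m3 / dt_s) / M3_PER_S_PER_SV)
    return fluxes


if __name__ == "__main__":
    # Hypothetical volumes: a loss of 1e15 m^3 of ice, then slight regrowth.
    times = [-16000, -15000, -14000]
    volumes = [3.0e16, 2.9e16, 2.95e16]
    print(meltwater_flux_sv(times, volumes))
```

With these assumed numbers, losing 10¹⁵ m³ of ice over 1,000 years corresponds to a mean flux of roughly 0.03 Sv. Such a calculation constrains the total amount of water, but not the location or short-term timing of its release, which are the parameters described above as mostly uncertain.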

Such flexibility in uncertain boundary conditions is not the common way to design a paleo MIP, but this less traditional method is eminently useful. First and foremost, not being too rigid on model boundary conditions allows for the use of the model ensemble for informally examining uncertainties in deglacial forcings, mechanisms, and feedbacks. There are also technical advantages: the last deglaciation is a very difficult simulation to set up, and can take anything from a month to several years of continuous computer run-time. Thus, allowing flexibility in the protocol enables participation from the widest possible range of models. Moreover, even a strict prescription of boundary conditions does not account for differences in the way those datasets can be implemented in different models, which inevitably leads to divergence in the simulation architecture. In designing a relatively open MIP protocol, our intention was to facilitate the undertaking of the most interesting and useful science. The approach will be developed in future iterations based on its success.

Non-linearity and mechanisms of abrupt change

One further paradigm to confront comes from the indication that rapid reorganizations in Atlantic Overturning Circulation may be triggered by passing through a window of instability in the model—e.g. by hitting a sweet-spot in the combination of model inputs (model boundary conditions and parameter values) and the model's background climate condition—and by spontaneous or externally-triggered oscillations arising due to internal variability in ocean conditions (see reviews by Li and Born 2019; Menviel et al. 2020). The resulting abrupt surface warmings and coolings are analogous to Dansgaard-Oeschger cycles, the period from Heinrich Stadial 1 to the Bølling-Allerød warming, and the Younger Dryas. However, the precise mechanisms underpinning the modeled events remain elusive, and it is clear that they arise under different conditions in different models. These findings open up the compelling likelihood that rapid changes are caused by non-linear feedbacks in a partially chaotic climate system, raising the distinct possibility that no model version could accurately predict the full characteristics of the observed abrupt events at exactly the right time in response to known environmental conditions.

Broader working group activities

Within the DeglAC MIP, we have several sub-working groups using a variety of climate and Earth System models to address key research questions on climate change. Alongside the PMIP last deglaciation experiment, these groups focus on: the Last Glacial Maximum (21 kyr BP; Kageyama et al. 2021), the carbon cycle (Lhardy et al. 2021), ice-sheet uncertainties (Abe-Ouchi et al. 2015), and the penultimate deglaciation (138–128 kyr BP; Menviel et al. 2019). However, none of this would be meaningful, or even possible, without the full integration of new data acquisition on climatic archives and paleodata synthesis efforts. Our communities aim to work alongside each other from the first point of MIP conception, to the final evaluation of model output.

Figure 2: Five sources of high-level quantitative and qualitative uncertainty to address using transient simulations of past climate change.

Looking ahead and embracing uncertainty

At the time of writing, 19 transient simulations of the last deglaciation have been completed covering ca. 21–11 kyr BP. In the next phase (multi-model analysis of these results), transient model-observation comparisons may present the most ambitious strand of DeglAC's work. Our attention is increasingly turning towards the necessity of untangling the chain of environmental changes recorded in spatially disparate paleoclimate archives across the Earth system. We need to move towards an approach that explicitly incorporates uncertainty (Fig. 2) into our model analysis (including comparison to paleoarchives), hypothesis testing, and future iterations of the experiment design. Hence, the long-standing, emblematic "PMIP triangle" (Haywood et al. 2013) has been reformulated into a pentagram of uncertainty, appropriate for a multi-model examination of major long-term and abrupt climate transitions.

The work is exciting, providing copious model output for exploring Earth system evolution on orbital to sub-millennial timescales. As envisaged in Japan 11 years ago, pooling our efforts is unlocking new ways of thinking that test established understanding of transient climate changes and how to approach simulating them. At the crux of this research is a nagging question: while there are such large uncertainties in key boundary conditions, and while models all have wide variability in their sensitivity to forcings and sweet-spot conditioning for producing abrupt changes, is there even a possibility that the real history of Earth's paleoclimate events can be simulated? It is time to up our game, to formally embrace uncertainty as being fundamentally scientific (Ivanovic and Freer 2009), and to build a new framework that capitalizes on the plurality of plausible climate histories for understanding environmental change.

Affiliations

1School of Earth and Environment, University of Leeds, UK

2Institut des Geosciences de l'Environnement, Université Grenoble Alpes, CNRS, France

Contact

Ruza Ivanovic: R.Ivanovic@leeds.ac.uk

References

Abe-Ouchi A et al. (2015) Geosci Model Dev 8: 3621-3637

Bereiter B et al. (2015) Geophys Res Lett 42: 2014GL061957

Bethke I et al. (2012) Paleoceanography 27: PA2205

Condron A, Winsor P (2012) Proc Natl Acad Sci USA 109: 19928-19933

Haywood AM et al. (2013) Clim Past 9: 191-209

Ivanovic RF, Freer JE (2009) Hydrol Process 23: 2549-2554

Ivanovic RF et al. (2016) Geosci Model Dev 9: 2563-2587

Ivanovic RF et al. (2017) Geophys Res Lett 44: 383-392

Kageyama M et al. (2013) Clim Past 9: 935-953

Kageyama M et al. (2021) Clim Past 17: 1065-1089

Lhardy F et al. (2021) In: Earth and Space Science Open Archive, http://www.essoar.org/doi/10.1002/essoar.10507007.1. Accessed 20 May 2021

Li C, Born A (2019) Quat Sci Rev 203: 1-20

Menviel L et al. (2019) Geosci Model Dev 12: 3649-3685