Publications

PAGES Magazine articles

2013

PAGES news

James D. Annan1, M. Crucifix2, T.L. Edwards3 and A. Paul4

In addition to improving the simulations of climate states, data assimilation concepts can also be used to estimate the internal parameters of climate models. Here we introduce some of the ideas behind this approach, and discuss some applications in the paleoclimate domain.

Estimation of model parameter values is of particular interest in paleoclimate and climate change research, since it is the formulation of model parameterizations, rather than the initial conditions, which is the main source of uncertainty regarding the climate’s long-term response to natural and anthropogenic forcings.

We should recognize at the outset that the question of a “correct” parameter value may in many cases be contentious. There is, for example, no single value to describe the speed at which ice crystals fall through the atmosphere, or the background rate of mixing in the ocean, to mention two parameters that are commonly varied in General Circulation Models (GCMs). Generally, the best we can hope for is to find a set of parameter values that performs well in a range of circumstances, and to make allowances for the model’s inadequacies, i.e. structural errors due to inadequate equations and parameterizations. However, inadequacies will always be present no matter how carefully parameter values are chosen: this should serve as a caution against over-tuning.

It may not be immediately clear how one can use proxy-derived observational estimates of climatic state variables such as temperature or precipitation to estimate the values of a model’s internal parameters. However, from a sufficiently abstract perspective, the problem of parameter estimation can be considered as equivalent to state estimation, via a standard approach in which the state space of a dynamical model is augmented by the inclusion of model parameters (Jazwinski 1970; Evensen et al. 1998). To see how this works, consider a system described by a dynamical model f, which uses a set of internal parameters θ and propagates a state vector x through time via a set of differential equations:

$$\dot{x} = f_\theta(x) \tag{1}$$

We can create an equivalent model g(x, θ) which takes as its state vector (x, θ) (in which the parameter values have simply been concatenated onto the end of the state vector), and propagates this vector through the augmented set of equations

$$\dot{x} = f_\theta(x) \tag{2}$$

$$\dot{\theta} = 0 \tag{3}$$

Thus, the existing methods and technology for estimating the state x can, in principle, be directly applied to the estimation of (x, θ), or in other words, the joint estimation of state and parameters.
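The augmentation can be sketched in a few lines of code. The example below is a minimal illustration only: the scalar decay model, the forward Euler scheme, and all names and numbers are assumptions chosen for brevity, not taken from any of the studies cited here. It shows the parameter being carried along in the state vector with a zero tendency.

```python
from typing import List


def f(x: float, theta: float) -> float:
    """Toy dynamics dx/dt = -theta * x (an assumed stand-in for a real model f)."""
    return -theta * x


def g(z: List[float]) -> List[float]:
    """Augmented tendency for z = [x, theta]: dx/dt = f_theta(x), dtheta/dt = 0."""
    x, theta = z
    return [f(x, theta), 0.0]


def integrate(z0: List[float], dt: float, n_steps: int) -> List[float]:
    """Propagate the augmented state with forward Euler steps."""
    z = list(z0)
    for _ in range(n_steps):
        tendency = g(z)
        z = [zi + dt * dzi for zi, dzi in zip(z, tendency)]
    return z


# Start from x = 1.0 with parameter theta = 0.5 and integrate to t = 1.
z_final = integrate([1.0, 0.5], dt=0.01, n_steps=100)
print(z_final)  # x has decayed; theta is unchanged
```

Any state estimation scheme that updates z from observations of x would then update θ as well, through whatever covariance it maintains between the two components.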

While this approach is conceptually straightforward, there are many practical difficulties in its application. The most widespread methods for data assimilation, including both Kalman filtering and 4D-VAR, rely on (quasi-)linear and Gaussian approaches. However, the augmented model g is likely to be substantially more nonlinear in its inputs than the underlying model f, due to the presence of product terms such as θ_i x_j (Evensen et al. 1998).
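A small worked case makes the added nonlinearity concrete. The scalar decay model used here is an assumption for illustration only, not a model from the cited work. For a fixed parameter, f is linear in x, but the augmented model is bilinear in (x, θ):

$$f_\theta(x) = -\theta x, \qquad g(x, \theta) = \begin{pmatrix} -\theta x \\ 0 \end{pmatrix}, \qquad \frac{\partial g}{\partial (x, \theta)} = \begin{pmatrix} -\theta & -x \\ 0 & 0 \end{pmatrix}$$

The Jacobian of g depends on the augmented state itself, so a linearization about one estimate of (x, θ) is only locally valid, even though the original model was linear in x.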

Further challenges exist in applying this approach due to the wide disparity in relevant time scales. Often the initial state has a rapid effect on the model trajectory within the predictability time scale of the model, which is typically days to weeks for atmospheric GCMs. On the other hand, the full effect of the parameters only becomes apparent on the climatological time scale, which may be decades or centuries.

Applications

Methods for joint parameter and state estimation in the full spatiotemporal domain continue to be investigated for numerical weather prediction, where data are relatively plentiful. But identifiability, that is the ability to uniquely determine the state and parameters given the observations, is a much larger problem for modeling past climates, where proxy data are relatively sparse in both space and time.

Therefore, data assimilation in paleoclimate research generally finds a way to reduce the dimension of the problem. One such approach is to reduce the spatial dimension, even to the limit of a global average. For example, a three-variable globally averaged conceptual model for glacial cycles has been tuned using flexible and powerful methods such as Markov Chain Monte Carlo (Hargreaves and Annan 2002) and Particle Filtering (Crucifix and Rougier 2009). Figure 1 presents the results of one parameter estimation experiment by Hargreaves and Annan (2002).
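To give a flavor of how a Markov Chain Monte Carlo method tunes a parameter of a low-dimensional model, the sketch below applies a random-walk Metropolis sampler to a one-parameter toy problem. Everything in it is assumed for illustration: the exponential decay model, the synthetic noise-free observations, the uniform prior on (0, 5), and the step size. It is not the model or the algorithm configuration used in the studies cited above.

```python
import math
import random
from typing import List

TIMES = [1.0, 2.0, 3.0, 4.0, 5.0]  # assumed observation times
OBS_SD = 0.1                        # assumed observational uncertainty


def simulate(theta: float) -> List[float]:
    """Toy model output x(t) = exp(-theta * t) at the observation times."""
    return [math.exp(-theta * t) for t in TIMES]


# Synthetic observations generated with a known parameter value of 0.5.
OBSERVATIONS = simulate(0.5)


def log_posterior(theta: float) -> float:
    """Gaussian log-likelihood plus a uniform prior on (0, 5)."""
    if not 0.0 < theta < 5.0:
        return -math.inf
    misfit = sum((s - o) ** 2 for s, o in zip(simulate(theta), OBSERVATIONS))
    return -0.5 * misfit / OBS_SD ** 2


def metropolis(n_steps: int = 20000, step_sd: float = 0.1,
               start: float = 1.0, seed: int = 0) -> List[float]:
    """Random-walk Metropolis sampler for the single parameter theta."""
    rng = random.Random(seed)
    theta, logp = start, log_posterior(start)
    chain = []
    for _ in range(n_steps):
        proposal = theta + rng.gauss(0.0, step_sd)
        logp_new = log_posterior(proposal)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            theta, logp = proposal, logp_new
        chain.append(theta)
    return chain


chain = metropolis()
samples = chain[2000:]  # discard an assumed burn-in period
posterior_mean = sum(samples) / len(samples)
print(round(posterior_mean, 2))
```

The retained samples approximate the posterior distribution of the parameter, so their spread gives an uncertainty estimate in addition to a best value.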

In the case of more complex and higher resolution models, the problems of identifiability and computational cost are most commonly addressed by the use of equilibrium states. Here, the full initial condition of the model is irrelevant, at least within reasonable bounds, and the dimension of the problem collapses down to the number of free parameters; typically ten at most, assuming many boundary conditions are not also to be estimated. With this approach, much of the detailed methodology of data assimilation as developed and practiced in numerical weather prediction, where the huge state dimension is a dominant factor, ceases to be so relevant.

While some attempts at using standard data assimilation methods have been performed (e.g. Annan et al. 2005), a much broader range of estimation methods can also be used. With reasonably cheap models and a sufficiently small set of parameters, direct sampling of the parameter space with a large ensemble may be feasible. A statistical emulator, which provides a very fast approximation to running the full model, may help in more computationally demanding cases (e.g. Holden et al. 2010).
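Direct sampling of a parameter space can be sketched as follows. This is a minimal example under stated assumptions: the one-parameter linear "equilibrium model", the observed value and its uncertainty, the uniform prior range, and the ensemble size are all invented for illustration. Each ensemble member is weighted by how well its equilibrium output matches the observation.

```python
import math
import random


def equilibrium_model(theta: float) -> float:
    """Hypothetical equilibrium response, linear in the parameter theta."""
    return -2.0 * theta


def log_likelihood(simulated: float, observed: float, obs_sd: float) -> float:
    """Gaussian log-likelihood of one observation (up to a constant)."""
    return -0.5 * ((simulated - observed) / obs_sd) ** 2


def sample_posterior_mean(n_members: int = 5000, seed: int = 0) -> float:
    """Weight a prior ensemble by the likelihood and return the weighted mean."""
    rng = random.Random(seed)
    observed, obs_sd = -4.0, 1.0  # made-up proxy estimate and uncertainty
    # Draw the ensemble from an assumed uniform prior on [0, 10].
    thetas = [rng.uniform(0.0, 10.0) for _ in range(n_members)]
    weights = [
        math.exp(log_likelihood(equilibrium_model(t), observed, obs_sd))
        for t in thetas
    ]
    total = sum(weights)
    return sum(w * t for w, t in zip(weights, thetas)) / total


posterior_mean = sample_posterior_mean()
print(round(posterior_mean, 2))
```

In a more demanding setting, `equilibrium_model` would be replaced by a call to the full model or to a statistical emulator of it, with the weighting step left unchanged.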

One major target of parameter estimation in this field has been the estimation of the equilibrium climate sensitivity. This may either be an explicitly tunable model parameter in the case of simpler models, or else an emergent property of the underlying physical processes that are parameterized in a more complex global climate model. The Last Glacial Maximum (LGM) is a particularly popular interval for study, due to its combination of a large signal-to-noise ratio and good data coverage over a quasi-equilibrium interval (Annan et al. 2005; Schneider von Deimling et al. 2006; Holden et al. 2010; Schmittner et al. 2011; Paul and Losch 2012). The methods used for studying the LGM in order to estimate the equilibrium climate sensitivity have covered a wide range of techniques including direct sampling of parameter spaces (with and without the use of an emulator), Markov Chain Monte Carlo methods, the variational approach using an adjoint model, and the Ensemble Kalman Filter. In general, more costly models require stronger assumptions and approximations due to computational limitations.

Approaches which aim at averaging out the highest frequencies of internal variability while still retaining a transient and time-varying forced response may make use of temporal data such as tree rings over the last few centuries (Hegerl et al. 2006). In that case, the spatial dimension can still be reduced, e.g. by averaging to a hemispheric mean. A similar approach was used by Frank et al. (2010) to estimate the carbon cycle feedback.

Paleoclimate simulations provide the only opportunity to test and critically evaluate climate models under a wide range of boundary conditions. This suggests that we need to continue to develop a broad spectrum of methods to be applied on a case-specific basis.

affiliations

1RIGC/JAMSTEC, Yokohama, Japan; jdannan@jamstec.go.jp

2Georges Lemaitre Centre for Earth and Climate Science, Université Catholique de Louvain, Belgium

3School of Geographical Sciences, Bristol University, UK

4MARUM, University of Bremen, Germany

selected references

Full reference list online under: pastglobalchanges.org/products/newsletters/ref2013_2.pdf

Annan JD et al. (2005) SOLA 1: 181-184

Crucifix M, Rougier J (2009) The European Physical Journal-Special Topics 174(1): 11-31

Hargreaves JC, Annan JD (2002) Climate Dynamics 19: 371-381

Kwasi Appeaning-Addo, S.W. Laryea, B.A. Foli and C.L. Allotey

Accra, Ghana, 8-12 October 2012

The third West African Quaternary Research Association (WAQUA) workshop, hosted by the Department of Marine and Fisheries Sciences, University of Ghana, was held at the Institute for Local Government Studies in Accra. The objective of the workshop was to identify how humans adapted to past climatic and sea level changes, and to discuss future adaptation strategies through a multidisciplinary approach.

Twenty-seven scientists from five countries attended the workshop. The inaugural lecture was given by Dr. Thomas Kwasi Adu of the Geological Survey Department of Ghana. He spoke about the causes of sea-level rise and coastal change, and their implications for coastal regions. His lecture stressed the fact that important planning decisions for sea-level rise should be based on the best available scientific knowledge and careful consideration of long-term benefits for a sustainable future. He recommended that decisions on adaptation or mitigation measures should also take into consideration economic, social, and environmental costs.

Sixteen scientific papers were presented and discussed on various topics covering sea-level rise, coastal erosion and climate change issues. In particular, the presentations addressed the impact of sea-level changes on coastal tourism development; the linkages between sea-level rise and groundwater quality, hydrodynamics, upwelling and biogeochemistry in the Gulf of Guinea; paleoclimatic evidence from Quaternary coastal deposits in Nigeria; the dynamics of ocean surges and their impacts on the Nigerian coastline; and the effect of climatic extreme events on reservoir water storage in the Volta Basin in Ghana.

The workshop provided a platform for scientists to share knowledge and information on their respective areas of research. At the end of the presentation sessions, the plenum agreed that research on sea-level rise should be particularly encouraged and that further activities to bring together scientists working in this area should be organized. To stimulate interest and expose students to new methods, regular international workshops or summer schools will be held to bring together students and experts from within and outside the sub-region.

Figure 1: This photo from 1985 shows a section of Keta town destroyed by sea erosion. Photo by Beth Knittle.

The meeting participants then went on a guided tour of coastal communities along the eastern coast of Ghana. One of them was Keta, a coastal town in the Volta Region that was partly destroyed by sea erosion at the end of the 20th century. Keta is situated on a sandspit separating the Gulf of Guinea from the Keta Lagoon. Due to this double waterfront, the city area is particularly vulnerable to erosion. It is flooded from the ocean front during high tides and from the lagoon front during heavy runoff, especially in the rainy seasons. During devastating erosion events between 1960 and 1980, more than half of the town area was washed away. The photo (Fig. 1) shows Keta in 1985. Since 1999, more than US$80 million has been invested to protect, restore and stabilize the coast of Keta.

The next WAQUA workshop will be held in Senegal in 2014.

acknowledgements

We express our sincere gratitude to INQUA, PAGES, PAST and the University of Ghana for sponsoring this workshop. We are also grateful to the local hosts, the Department of Marine and Fisheries Sciences, University of Ghana and the Ghanaian Institute of Local Government Studies.

affiliation

Department of Marine and Fisheries Sciences, University of Ghana; kappeaning-addo@ug.edu.gh

Marie-José Gaillard1 and Qinghai Xu2

Shijiazhuang, China, 9-11 October 2012

This workshop was held at Hebei Normal University in China, and contributed to the PAGES Focus 4 theme Land Use and Cover (LUC). A major goal of LUC is to achieve Holocene land-cover reconstructions that can be used for climate modeling and testing hypotheses on past and future effects of anthropogenic land cover on climate. Collaborations initiated between Linnaeus University (Sweden; M.-J. Gaillard), University of Hull (UK; J. Bunting), Hebei Normal University (China; Q. Xu and Y. Li), the French Institute (Pondicherry, India; A. Krishnamurthy) and Lucknow University (India; P. Singh Ranhotra) are in line with the goals of Focus 4-LUC and follow the strategy of the European LUC-relevant LANDCLIM project (Gaillard et al. 2010). The aim of these collaborations is to develop quantitative reconstructions of past vegetation cover in India and China using pollen-vegetation modeling and archaeological/historical data together with other land-cover modeling approaches.

The objective of this workshop in China was to initiate the necessary collaborations and activities to make past land-cover reconstructions possible in Eastern Asia. The three major outcomes of the workshop are (i) the building of a large network of experts from Eastern Asia, Europe and the USA now working together to understand past changes in land use and land cover in Eastern Asia, (ii) that all existing East Asian pollen databases will be integrated into the NEOTOMA Paleoecology Database by 2014 (coordinated by Eric Grimm, Illinois State University, USA), and (iii) that a review paper will be prepared with the working title “Past land-use and anthropogenic land-cover change in Eastern Asia - evaluation of current achievements, potentials and limitations, and future avenues”. It is planned to submit this article this fall. It will include (1) a review of Holocene human-induced vegetation and land-use changes in Eastern Asia based on results presented at the workshop; (2) a discussion of the existing anthropogenic land-cover change scenarios (HYDE, Klein Goldewijk et al. 2010; KK10, Kaplan et al. 2009) in the light of the reviewed proxy-based knowledge; and (3) a discussion on the implications of these results for future climate modeling and the study of past land cover-climate interactions.

These significant outcomes are the result of three intense days of presentations and discussion sessions. During the lectures, results were presented for historical, paleoecological and pollen-based reconstructions; pollen-vegetation relationships; past human-impact studies; and model scenarios of anthropogenic land-cover changes in the past. Some of the conference was also dedicated to reviewing the state of existing pollen databases and more generally, database building.

Plenary and group break-out discussions focused on more technical aspects such as improving pollen databases, especially for the East Asian region; planning the review paper; and scientific topics such as the use of pollen-based and historical land-cover reconstructions for the evaluation of model scenarios of past anthropogenic land-cover change (Fig. 1), and the integration of model scenarios with paleoecological or historical reconstructions.

affiliations

1Department of Biology and Environmental Science, Linnaeus University, Kalmar, Sweden; marie-jose.gaillard-lemdahl@lnu.se

2Key Laboratory of Environmental Change and Ecological Construction, Hebei Normal University, Shijiazhuang, China

references

Gaillard MJ et al. (2010) Climate of the Past 6: 483-678

Kaplan J, Krumhardt K, Zimmermann N (2009) Quaternary Science Reviews 28: 3016-3034

Klein Goldewijk K, Beusen A, Janssen P (2010) The Holocene 20: 565-573

Olofsson J, Hickler T (2008) Vegetation History and Archaeobotany 17: 605-615

Anson W. Mackay1, A.W.R. Seddon2,3 and A.G. Baker2

Oxford, UK, 13-14 December 2012

Figure 1: Flowchart of the selection process for the 50 priority research questions.

Paleoecological studies provide insights into ecological and evolutionary processes, and help to improve our understanding of past ecosystems and human interactions with the environment. But paleoecologists are often challenged when it comes to processing, presenting and applying their data to improve ecological understanding and inform management decisions (e.g. Froyd and Willis 2008) in a broader context. Participatory exercises in, for example, conservation, plant science, ecology, and marine policy, have developed as an effective and inclusive way to identify key questions and emerging issues in science and policy (Sutherland et al. 2011). With this in mind, we organized the first priority questions exercise in paleoecology with the goal of identifying 50 priority questions to guide the future research agenda of the paleoecology community.

The workshop was held at the Biodiversity Institute of the University of Oxford. Participants included invited experts and selected applicants from an open call. Key funding bodies and stakeholders were also represented at the workshop, including the US NSF, IGBP PAGES, UK NERC, and UK Natural England.

Several months prior to the workshop, suggestions for priority questions had been invited from the wider community via list-servers, mailing lists, society newsletters, and social media, particularly Twitter (@Palaeo50). By the end of October 2012, over 900 questions had been submitted from almost 130 individuals and research groups. Questions were then coded and checked for duplication and meaning, and similar questions were merged. The remaining 800 questions were re-distributed to those who had initially engaged in the process. Participants were asked to vote on their top 50 priority questions.

At the end of November, the questions were grouped into 50+ categories, which in turn were allocated to one of six workshop themes, each chaired by an expert: Human-environment interactions in the Anthropocene (Erle Ellis, University of Maryland, USA); Biodiversity, conservation and novel ecosystems (Lindsey Gillson, University of Cape Town, South Africa); Biodiversity over long time scales (Kathy Willis, University of Oxford, UK); Ecosystems and biogeochemical cycles (Ed Johnson, University of Calgary, Canada); Quantitative and qualitative reconstructions (Stephen Juggins, University of Newcastle, UK); and Approaches to paleoecology (John Birks, University of Bergen, Norway).

Each working group also had a co-chair, responsible for recording votes and editing questions on a spreadsheet, and a scribe. Workshop participants were allocated to one of six parallel working groups, each tasked with reducing the number of questions from 180 to 30 by the end of day one. This was an intensive process involving considerable debate and editing. During day two, these 30 questions were winnowed down further, with each group arriving at seven priority questions. The seven questions from each group were then combined to obtain 42 priority questions. Each working group had a further five reserve questions, which everyone voted on in the final plenary. The eight reserve questions that obtained the most votes were selected to complete the list of 50 priority questions.

Working group discussions were often heated and passionate. Compromises won by the chairs and co-chairs were difficult but necessary. It is important that the final 50 priority questions are not seen as a definitive list, but as a starting point for future dialogue and research ideas.

The final list of 50 priority questions and full details of the methodology are currently under review, and the publication will be announced through the PAGES network.

acknowledgements

We thank PAGES for generously providing support. We also acknowledge sponsorship from the British Ecological Society, the Quaternary Research Association and the Biodiversity Institute, University of Oxford.

affiliations

1Environmental Change Research Centre, University College London, UK; a.mackay@ucl.ac.uk

2Oxford Long-Term Ecology Laboratory, University of Oxford, UK

3Department of Biology, University of Bergen, Norway

references

Froyd CA, Willis KJ (2008) Quaternary Science Reviews 27: 1723-1732

Sutherland WJ et al. (2011) Methods in Ecology and Evolution 2: 238-247

Rainer Zahn¹, W.P.M. de Ruijter², L. Beal³, A. Biastoch⁴ and SCOR/WCRP/IAPSO Working Group 136⁵

Stellenbosch, Republic of South Africa, 8-12 October 2012

This conference was held in recognition of the significance of the Agulhas Current to ocean physical circulation, marine biology and ecology, and climate at the regional to global scale. The conference centered on the dynamics of the Agulhas Current in the present and the geological past; the influence of the current on weather, ecosystems, and fisheries; and the impact of the Agulhas Current on ocean circulation and climate, with a notable focus on the Atlantic Meridional Overturning Circulation (AMOC). A total of 108 participants from 20 countries, including seven African countries, attended the conference, of whom a quarter were young researchers at the PhD student level. Participants came from the areas of ocean and climate modeling, physical and biological oceanography, marine ecology, paleoceanography, meteorology, and marine and terrestrial paleoclimatology. The Agulhas Current attracts interest from these communities because of its significance to a wide range of climatic, biological and societal issues.

The current sends waters from the Indian Ocean to the South Atlantic. This is thought to modulate convective activity in the North Atlantic. It is possible that it even stabilizes the AMOC at times of global warming when freshwater perturbation in the North might weaken it. But these feedbacks are not easy to trace, and direct observations and climate models have been the only way to indicate the possible existence of such far-field teleconnections to date. This is where marine paleo-proxy profiles prove helpful. They reveal the functioning of the Agulhas Current under a far larger array of climatic boundary conditions than those present during the short period of instrumental observations. For instance, altered conditions in the past, with shifted ocean circulation and wind fields, stimulated Agulhas water transports from the Indian Ocean to the Atlantic, the so-called Agulhas leakage, at rates very different from today’s. A number of the paleo-records that were shown at the meeting demonstrated a link between Agulhas leakage and Dansgaard/Oeschger-type abrupt climate changes in the North Atlantic region, suggesting that salt-water leakage may have played a role in strengthening the AMOC and in sudden climate warming in the North.

Marine ecosystems were also shown to be measurably impacted by the Agulhas system. Notably, the high variability associated with the prominence of mesoscale eddies and dipoles along the current affects plankton communities, large predators, and pelagic fish stocks, and possibly even facilitates the northward sardine runs against the vigorous southward flow of the Agulhas Current.

The meteorological relevance of the Agulhas Current was also demonstrated, for example its role as a prominent source of atmospheric heat and its significance in maintaining and anchoring storm tracks. Among other things, these affect the atmospheric westerly Polar Front Jet and Mascarene High, with onward consequences for regional weather patterns, including extreme rainfall events over South Africa.

The conference developed a number of recommendations; two key ones being that efforts should be made to trace the impacts of the Agulhas leakage on the changing global climate system at a range of timescales, and that sustained observations of the Agulhas system should be developed. Implementing these recommendations would constitute a major challenge logistically and the Western Indian Ocean Sustainable Ecosystem Alliance (WIOSEA) was identified as a possible integrating platform for the cooperation of international and regional scientists toward these goals. This would involve capacity building and training regional technicians and scientists, which could be coordinated through partnerships with the National Research Foundation in South Africa.

The conference was held under the auspices of the American Geophysical Union Chapman Conference series and organized by the Scientific Committee on Oceanic Research (SCOR)/ World Climate Research Program (WCRP)/ International Association for the Physical Sciences of the Oceans (IAPSO) Working Group 136. Additional sponsorship came from the International Union of Geodesy and Geophysics; US NSF; NOAA; PAGES; Institute of Research for Development (IRD) France; and the Royal Netherlands Institute for Sea Research.

affiliations

1Institut de Ciència i Tecnologia Ambientals, Universitat Autònoma de Barcelona, Bellaterra, Spain; rainer.zahn@uab.cat

2Institute for Marine and Atmospheric Research, Utrecht University, The Netherlands

3Rosenstiel School of Marine and Atmospheric Science, University of Miami, USA

4GEOMAR Helmholtz Centre for Ocean Research, Kiel, Germany

5www.scor-int.org/Working_Groups/wg136.htm

Jérôme Chappellaz1, Eric Wolff2 and Ed Brook3

Presqu’île de Giens, France, 1-5 October 2012

IPICS (International Partnerships in Ice Core Sciences) is the key planning group for international ice core scientists. Established in 2005, it now includes scientists from 22 nations and aims at defining the scientific priorities of the ice core community for the coming decade. IPICS lies under the common umbrella of IGBP/PAGES, SCAR (Scientific Committee on Antarctic Research) and IACS (International Association of Cryospheric Sciences).

IPICS’ First Open Science Conference, organized by the European branch of IPICS (EuroPICS), took place in a beautiful setting on the French Côte d’Azur. A total of 230 scientists from 23 nations gathered, with a good mix of junior and senior scientists present. While most of the participants work on ice cores, a significant number were scientists working on marine and continental records as well as on climate modeling. The sponsorship received from several institutions, agencies and projects enabled us to invite ten keynote speakers as well as six scientists from emerging countries.

The program followed IPICS’ main scientific objectives as outlined in four white papers (www.pages-igbp.org/ipics). Notably, it covered questions of climate variability at different time scales (from the last 2000 years to the last 1 million years), biogeochemical cycles, dating, and ice dynamics. New challenges, such as studying the bacterial content of ice cores, and new methodologies were also the focus of specific sessions. Over the five days of the conference, all attendees gathered for the plenary sessions, which were combined with long poster sessions. These sessions offered valuable and efficient networking opportunities. The full program can be found at: www.ipics2012.org

Among the various results presented on this occasion, significant information was provided on two big recent projects of the ice core community: the WAIS Divide deep drilling in West Antarctica, and the NEEM deep drilling in North-West Greenland. Ice core projects from outside polar regions were also well represented, with results obtained from the Andes, the Alps and the Himalayas.

The beautiful and peaceful setting of the conference center enabled strong and efficient networking; no doubt, in the future we will see that many new ice core drilling projects had their roots at IPICS’ 1st OSC. Proving that ice core scientific outputs remain of prime importance to high-impact journals, the Chief Editor of Nature as well as an editor of Nature Geoscience attended the full five days of the event.

IPICS’ next OSC will take place in 2016. An open call for bids to organize it will be launched in 2013.

acknowledgements

The organizers thank the many sponsors for their generous financial support: European Descartes Prize of the EPICA project, ERC project ICE&LASERS (Grant # 291062), LabEX OSUG@2020 project of the Grenoble Observatory for Earth Sciences and Astronomy, European Polar Board, SCAR, PAGES, IACS, Aix-Marseille University, Versailles – St Quentin University, Fédération de Recherche ECCOREV, IRD, LGGE, LSCE, Picarro, LabEX project L-IPSL, Collège de France and IPEV. Efficient administrative and financial handling of the conference was provided by the Floralis company. Special thanks to Stéphanie Lamarque and Audrey Maljean from Floralis for their help over the two years of conference preparation.

affiliations

1Laboratoire de Glaciologie et Géophysique de l’Environnement, Université Joseph Fourier Grenoble, St Martin d’Hères, France; jerome@lgge.obs.ujf-grenoble.fr

2British Antarctic Survey, Cambridge, UK

3College of Earth, Ocean, and Atmospheric Sciences, Oregon State University, Corvallis, USA

Diego Navarro1 and Steve Juggins2

Isle of Cumbrae, Scotland, 16-20 August 2012

Figure 1: Participants during the R workshop. Photo by S. Juggins.

Paleolimnology has grown rapidly over the last two or three decades in terms of the number of physical, chemical, and biological indicators analyzed and the quantity, diversity and quality of data generated. Such growth has presented paleolimnologists with the challenge of dealing with highly quantitative, complex, and multivariate data to document the timing and magnitude of past changes in aquatic systems, and to understand the internal and external forcing of these changes. To cope with such tasks paleolimnologists continuously add new and more sophisticated numerical and statistical methods to help in the collection, assessment, summary, analysis, interpretation, and communication of data. Birks et al. (2012) summarize the history of the development of quantitative paleolimnology and provide an update to analytical and statistical techniques currently used in paleolimnology and paleoecology.

R is both a programming language and a complete statistical and graphical programming environment. Its use has become popular because it is a free and open-source application, but above all because its capability is continuously enhanced by new and diverse packages developed and generously provided by a large community of scientists.

This recent workshop, held in the comfortable facilities of the Millport Marine Biological Station, trained researchers in the theory and practice of analyzing paleolimnological data using R. The course was led by Steve Juggins (Newcastle University) and Gavin Simpson (University College London, recently moved to the University of Regina), two of the researchers who have contributed to the development and application of different statistical tools and packages for paleoecology within the R community.

A total of 31 participants from a range of continents (North and South America, Europe, Asia, and Africa), career stages (PhD students to faculty) and scientific backgrounds (paleolimnology, palynology, diatoms, chironomids, sedimentology) enjoyed four long days of training in statistical tools and working on their own data. Initially, participants were introduced to R software and language, tools for summarizing data, exploratory data analysis and graphics. The following lectures and practical sessions focused on simple, multiple and modern regression methods; cluster analysis and ordination techniques used to summarize patterns in stratigraphic data; hypothesis testing using permutations for temporal data, age-depth modeling, chronological clustering, smoothing and interpolation of stratigraphic data; and calculation of rates of change. The final lectures dealt with the application of techniques for quantitative environmental reconstructions. The theory and assumptions underpinning each method were introduced in short lectures, after which the students had the opportunity to apply what they had learned to data sets and real environmental questions during practical sessions. There was also time in the evenings for sessions on important R tips, advanced R graphics, special topics proposed by the assistants, and for the students to work on their own data.

The course was conveniently organized just prior to the 12th International Paleolimnology Symposium (Glasgow, 20-24 August 2012), which enabled all of the workshop participants to attend the symposium and encouraged further discussions throughout the following week. PAGES covered travel and course costs for five young researchers from developing countries (Turkey, South Africa, Macedonia, and Argentina), all of whom were very grateful for the opportunity to attend.

affiliations

1Instituto de Investigaciones Marinas y Costeras, CONICET, Universidad Nacional de Mar del Plata, Argentina; dnavarro conicet.gov.ar

2School of Geography, Politics and Sociology, Newcastle University, Newcastle upon Tyne, UK

Juerg Beer

Workshop of the PAGES Solar Working Group – Davos, Switzerland, 5-7 September 2012

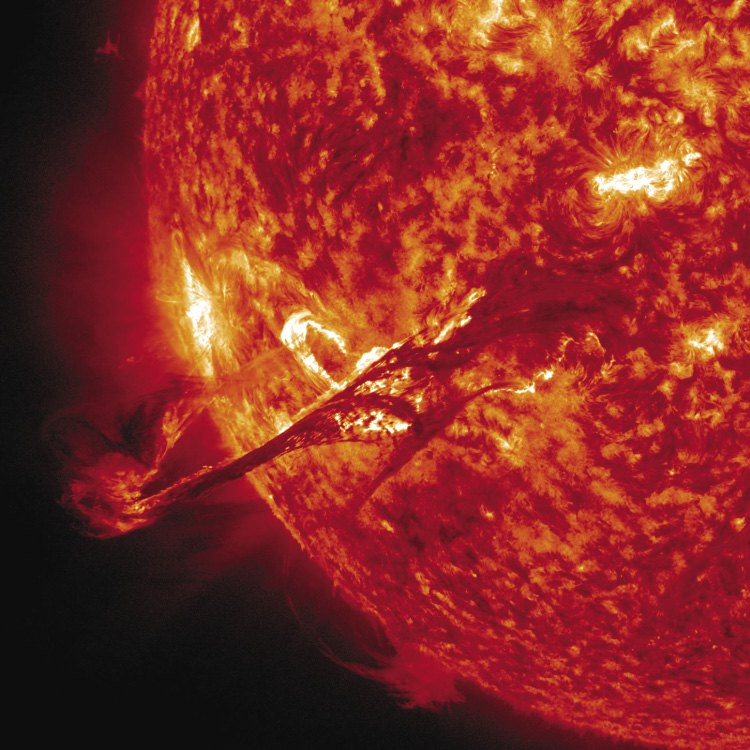

Figure 1: Image of a solar magnetic filament burst. Image by NASA.

Better understanding the Sun and its role in climate change is an important but difficult goal. It is important because properly assessing the anthropogenic effect on climate change requires an accurate quantification of the natural forcing factors. But it is difficult because:

(1) natural forcing records are generally not well quantified;

(2) the response of the climate system to forcings is non-linear due to various feedback mechanisms and can only be estimated using complex climate models;

(3) in spite of their complexity, models may not comprise all relevant processes and have to be validated, but the instrumental records of climate forcing and climate response are generally too short for this purpose, so the data set needs to be complemented by proxy data;

(4) proxy data are derived from natural archives and are only indirectly related to the physical parameters of interest, and their calibration is based on assumptions that may not be fully valid on longer time scales;

(5) instrumental and proxy data reflect the combined response to all forcings, and not only the influence of the Sun. Furthermore, the climate system shows internal unforced variability. All this makes separation and quantification of the individual forcings very difficult.

The main aim of the first workshop of the solar forcing working group was to assess the present state of the art and identify knowledge gaps by bringing together experts from the solar, the observational and paleo-data, and modeling communities.

The workshop was organized jointly with FUPSOL (Future and past solar influence on the terrestrial climate), a multi-disciplinary project of the Swiss National Science Foundation that addresses how past solar variations have affected climate, and how this information can be used to constrain solar-climate modeling. FUPSOL also aims to address the key question of how a decrease in solar forcing in the next decades could affect climate at global and regional scales.

Here are some examples of open questions and problems that were identified in this workshop, and will be addressed in more detail in subsequent meetings:

• Physical solar models are not yet capable of explaining many observed features such as solar cycles and changes in total solar irradiance (TSI) and solar spectral irradiance (SSI).

• There are still unresolved discrepancies between different composites of TSI based on the same satellite data.

• Semi-empirical models are relatively successful in explaining short-term changes in TSI and SSI on time scales of days to years. However, on multi-decadal time scales, both the input data and the instrumental TSI and SSI data needed for comparison are missing.

• TSI and SSI reconstructions based on proxies suffer from large uncertainties in their amplitudes.

• The most recent minimum, between solar cycles 23 and 24, and probably also the upcoming minimum provide a glimpse of the Sun at the lowest activity level observed during the satellite era.

• UV forcing and possibly also precipitating particles have significant impacts on atmospheric chemistry and dynamics and need to be included in models.

• Detection and attribution of solar forcing is often hampered by volcanic eruptions occurring simultaneously. Strategies to separate solar and volcanic forcings could be to select periods of low volcanic activity (e.g. the Roman period), to consider regional effects that differ for different forcings, and to look for multi-decadal to centennial solar cycles with well-defined periodicities.

As an opening spectacle to the workshop, a medium-sized flare caused a long magnetic filament to burst out from the Sun (Fig. 1). Viewed in extreme ultraviolet light, the filament strand stretched outwards until it finally broke and headed off to the left. Some of the particles from this eruption hit Earth in September 2012, generating a beautiful aurora.

affiliation

Swiss Federal Institute of Aquatic Science and Technology (Eawag), Dübendorf, Switzerland; beer eawag.ch

Lucien von Gunten, D.M. Anderson2, B. Chase1, M. Curran1, J. Gergis1, E.P. Gille2, W. Gross2, S. Hanhijärvi1, D.S. Kaufman, T. Kiefer, N.P. McKay1, I. Mundo1, R. Neukom1, M. Sano1, A. Shah2, J. Tyler1, A. Viau1, S. Wagner1, E.R. Wahl2 and D. Willard

The PAGES 2k Network and NOAA collaborate closely to optimize data compilations, and to build structures to facilitate ongoing supply and dynamic use of data. It is thus a successful example of a large trans-disciplinary effort leading to added value for the scientific community.

The PAGES 2k Network was formed to study climate change over the last two millennia at a regional scale, based on the most comprehensive dataset of paleoclimate proxy records possible. In 2011, at its second network meeting in Bern, Switzerland (von Gunten et al. 2012), the network formally acknowledged that the envisioned data-intensive multi-proxy and multi-region study must be built on the foundations of efficient and coordinated data management. In addition, the group committed to PAGES' general objective to promote open access to scientific data and called for all records used for, or emerging from, the 2k project to be publicly archived upon publication of the related 2k studies.

Architects of the home for 2k data

The National Climatic Data Center at the National Oceanic and Atmospheric Administration (NOAA) offered to host the primary 2k data archive. They set up a dedicated NOAA task force to tailor the 2k data archive to the specific needs of the 2k project and to coordinate archiving with NOAA's data architecture and search capabilities. The 2k groups nominated regional data managers to provide input from the users’ end.

Over the last two years, the regional 2k data managers have worked closely with NOAA to tailor the database infrastructure and prepare the upload of the 2k data. In addition, they provided expertise to help promote improvements in NOAA’s archival of paleoscientific data in general. Since the data managers of the regional 2k groups are spread across the globe, the collaboration was organized around bi-monthly teleconference meetings under the lead of NOAA. In spite of the occasional unearthly meeting hours for some, the interaction between the 2k data and NOAA database groups has worked fruitfully, as the following achievements show.

The 2k database

The paleoclimatology program at NOAA has set up a dedicated 2k project site with sub-pages for all regional groups (www.ncdc.noaa.gov/paleo/pages2k/pages-2k-network.html). This page was created early in the project to provide the regional groups with a central place to continuously compile datasets considered relevant to their studies.

Populating the database

A two-step approach was applied for entering the 2k data into the database in order to serve demands for both speed and thoroughness.

First, all records used for the first synthesis article on regional temperature reconstructions (PAGES 2k Consortium 2013) were made available on a “data synthesis products” page dedicated to the paper (hurricane.ncdc.noaa.gov/pls/paleox/f?p=519:1:::::P1_STUDY_ID:14188). This ensured that the records were made publicly available exactly at the time of publication and in a format that will remain identical to the data files supplementing the article. In a second step, all these records are currently being (re)submitted to NOAA with more detailed metadata information than before using a new submission protocol. Additionally, many new and already stored records that were not used for the PAGES 2k temperature synthesis are being (re)formatted to the new submission protocol. This will allow improved search and export capabilities for a wealth of records that can currently only be accessed individually.

Improved data submission protocol

The data submission process is a crucial step for the long-term success of a database. On the one hand, it should contain as much relevant information as possible in order to maximize the value of the data. On the other hand, it should remain simple enough to keep the threshold for data providers as low as possible. The NOAA task force and 2k data managers therefore created a substantially revised submission template file. This new protocol allows including more comprehensive information relating to the proxy records, and, crucially, is organized in a structured format that allows machine reading and automated searching for defined metadata information. This is critical in order to maximize the usefulness of the data to other scientists, as it additionally allows them to reprocess underlying features of the records such as the chronology or proxy calibrations.
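To illustrate why a structured template helps with machine reading, here is a minimal sketch that collects header metadata into a dictionary. The "# Key: value" layout, the field names, and the sample values are all invented for illustration; they are not taken from the actual NOAA submission template.

```python
# Invented sample file: the "# Key: value" header style and every field name
# below are hypothetical, not the real NOAA template.
SAMPLE = """\
# Site_Name: Example Lake
# Archive: lake sediment
# Earliest_Year: 1
# Latest_Year: 2000
1000\t12.3
1001\t12.1
"""


def parse_metadata(text):
    """Collect '# Key: value' header lines into a dictionary."""
    metadata = {}
    for line in text.splitlines():
        if not line.startswith("#"):
            continue  # data rows are skipped; only header lines carry metadata
        key, sep, value = line.lstrip("# ").partition(":")
        if sep:
            metadata[key.strip()] = value.strip()
    return metadata


meta = parse_metadata(SAMPLE)
```

Once the metadata sit in a predictable structure like this, searching for a defined field across many records becomes a simple lookup rather than a manual reading of free text.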

The new data submission template is also optimized for taking advantage of NOAA’s archival structure, which follows international conventions for data description and archiving, and the Open Archive Initiative Protocol for Metadata Harvesting. This allows PAGES 2k data to be visible beyond the NOAA web site.
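As a rough illustration of what metadata harvesting involves, the sketch below builds request URLs in the style of the OAI-PMH 2.0 specification. The verbs `Identify` and `ListRecords` and the arguments `metadataPrefix` and `from` come from that specification; the base URL is a placeholder and is not NOAA's actual endpoint, and the helper name is hypothetical.

```python
from urllib.parse import urlencode

# Placeholder endpoint for illustration only; NOT NOAA's real harvesting URL.
BASE_URL = "https://example.org/oai"


def oai_request_url(verb, base_url=BASE_URL, **arguments):
    """Build an OAI-PMH style request URL for a verb and its arguments."""
    params = {"verb": verb}
    for name, value in arguments.items():
        # "from" is a Python keyword, so callers pass from_ instead.
        params[name.rstrip("_")] = value
    return f"{base_url}?{urlencode(params)}"


identify_url = oai_request_url("Identify")
records_url = oai_request_url(
    "ListRecords", metadataPrefix="oai_dc", from_="2013-01-01"
)
```

A harvester would fetch such URLs and parse the XML responses; that step is omitted here because it depends on the real endpoint.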

The NOAA-Paleo archive has new search capabilities that allow project-specific searches using logical operators (e.g. “PAGES 2K AND Monsoon”). Additional functionalities are planned that will, for example, allow the user to select a subset of proxy data for a region and generate a single downloadable file of the requested data in NetCDF, ASCII, or Excel™ formats.
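The idea behind a search such as “PAGES 2K AND Monsoon” can be sketched with a toy keyword filter. This is only an illustration of the logical AND, not NOAA's search implementation; the record titles and keywords are invented.

```python
def matches_all(query, keywords):
    """Return True if every term of an 'A AND B' query is among the keywords."""
    terms = [term.strip().lower() for term in query.split(" AND ")]
    lowered = {keyword.lower() for keyword in keywords}
    return all(term in lowered for term in terms)


# Invented example records: (title, keywords)
records = [
    ("Record A", ["PAGES 2k", "Monsoon", "speleothem"]),
    ("Record B", ["PAGES 2k", "tree ring"]),
    ("Record C", ["Monsoon"]),
]

hits = [
    title
    for title, keywords in records
    if matches_all("PAGES 2K AND Monsoon", keywords)
]
```

Only records tagged with both terms are returned, which is what makes the search project-specific rather than a plain keyword match.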

2k data management - next steps

In the next phase of the project starting now, the 2k network will work on completing the database of paleoclimate records of the last 2000 years to eventually produce new synoptic climate reconstructions. The proxy records will be collected according to the new NOAA data submission protocol. The prior use of this template during the collection and analysis phases of the project has the following advantages: 1) all records are collected in the same, uniform format allowing for the inclusion of all relevant information, 2) the files are easily computer readable for data analyses, and 3) no additional formatting is required for the subsequent submission to the NOAA-Paleo archive.

For large, data-intensive studies a good data management strategy is crucial. The experience from the PAGES 2k project suggests that setting up a data manager team and involving archivist partners such as NOAA at an early stage of the project is key to handling data efficiently.

This new collaborative data storing effort is only possible thanks to the members of the regional 2k groups who provide their data and metadata inventories with the aim to make the global network of paleoclimate datasets publicly available.

affiliations

1PAGES 2k data managers

2NOAA Paleoclimatology branch members

Full affiliations listed here: pastglobalchanges.org/products/newsletters/ref2013_2.pdf

references

PAGES 2k Consortium (2013) Nature Geoscience 6: 339-346

von Gunten L, Wanner H, Kiefer T (2012) PAGES news 20(1): 46

Suzette G.A. Flantua1,2, H. Hooghiemstra1, E. Grimm3 and V. Markgraf4

The Latin American Pollen Data Base, better known as the LAPD, is an extensive database of pollen from peat and lake cores and surface samples. It covers the areas of Central America, the Caribbean, and South America. The database was launched in 1994 by a research group headed by Vera Markgraf at the University of Colorado, USA, and its management moved to the University of Amsterdam in 1998, where it was hosted by Robert Marchant at the Institute of Biodiversity Ecosystem Dynamics (IBED).

The LAPD started as a website where palynologists were encouraged to share their data. Unfortunately, after the project ended in 2003 no further updates were made to the website and the related database, and the list of pollen data of Latin America quickly became outdated. Recognizing the urgent need for an updated LAPD, the group at IBED decided to revive it. Supported by three grants from the Amsterdam-based Hugo-de-Vries-Foundation (Van Boxel and Flantua 2009; Flantua and Van Boxel 2011), Suzette Flantua initiated a search for studies published after 2003. While the search for pollen records continues, the new “LAPD 2013” inventory contains 1478 pollen sites throughout Latin America, more than three times the 463 sites listed at the last update in 1997. The number of countries represented has increased from 15 to 29.

In the meantime, the “NEOTOMA” database (www.NEOTOMAdb.org) was developed through an international collaborative effort of individuals from 23 institutions. This cyber infrastructure was designed to manage large multiproxy datasets, which makes it easy to explore, visualize, and compare a wide variety of paleoenvironmental data. This is why it was chosen as the primary archive site for the Global Pollen Database. The LAPD data in the 1997-list is now available through NEOTOMA (Fig. 1), and the complete 2013 LAPD inventory with metadata will soon be available through NEOTOMA Explorer (http://ceiwin5.cei.psu.edu/Neotoma/Explorer/).

Although all LAPD data will be incorporated in NEOTOMA, the LAPD database will still exist as an independent entity. There will be a LAPD page within the existing NEOTOMA website which can be used to obtain information on updated pollen sites, the pollen data, and events related to LAPD. It was also proposed to form a Latin American NEOTOMA group of paleoecological researchers to manage the database, and upload and control the quality of the data.

As almost no new data have been contributed to LAPD since 2003, IBED is in the process of digitizing and uploading its pollen database to make a significant contribution to LAPD and thus stimulate global collaboration, as well as data input and use by other research groups. The data of the newest pollen sites will be kept on “standby” in an offline database and made publicly available once the related research papers are accepted for publication.

We would like to make researchers aware of the much richer palynological information now available for Latin America and we hope the pollen community will recognize LAPD-NEOTOMA as an important archive, where the original authors are cited and acknowledged to have contributed to the database. We emphasize the great opportunity to promote multidisciplinary research on a continental scale and international scientific cooperation.

We invite anyone with questions, doubts, or relevant information to contact us through Suzette Flantua and to support this global palynological initiative for an improved integration of knowledge and efforts.

affiliations

1Institute of Biodiversity Ecosystem Dynamics, University of Amsterdam, Netherlands; s.g.a.flantua uva.nl

2Palinología y Paleoecología, Universidad Los Andes, Bogotá, Colombia

3Illinois State Museum, Springfield, USA

4Institute of Arctic and Alpine Research, University of Colorado, Boulder, USA

references

Van Boxel JH, Flantua SGA (2009) Pilot study of the geographical distribution of plant functional types in the Amazonian lowlands. IBED, University of Amsterdam